- About

Getting to know us

- Services

- Managed Services

- Application Services

- Cloud Services

- Data Science

- Consulting Services

- Technology Solutions

Managed Services

Application Services

Data Science

Consulting Services

Technology Solutions

- Industries

- Resources

Resources

- Contact

- Contact us

Better Together: Oracle Database Compression for Oracle Database Partitioning

Contents

The best compression for OLTP workloads and Data Warehousing

Oracle compression at the table level, now called Advanced Row Compression, was first introduced in Oracle Database 11g. This powerful feature maintains compression at both the insert and update operations. Its internal mechanism minimises the overhead of write operations on compressed data making it suitable for both Data Warehouse (DW) and Online Transaction Processing (OLTP) type environments.

Oracle’s unique algorithm

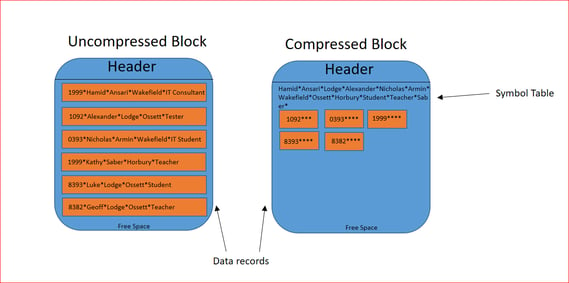

Oracle Advanced Compression uses a unique algorithm designed for both OLTP and DW applications. This process works on the block level by identifying duplicate data values using short references in an internal structure called a symbol table stored in the actual block itself. Compressed data is self-contained within the data block as the metadata used to translate compressed data into its original state is stored in the block header. This allows for a significant performance improvement by avoiding additional I/O when accessing compressed data. The diagram below shows an example of an uncompressed and compressed block.

In the above diagram, the data has been taken out of the block and put in a special area at the top of the block called a symbol table. The data in the column is assigned a symbol that takes the place of the actual data value. As symbols are smaller than the data values, the record sizes are much smaller than the original data. The more repeating data the row has, the more compact the symbol table and subsequently the block.

It is very important to note that the compression takes place as a triggering event and not when the row is inserted or updated. Therefore, there are no performance hits during normal DML operation. Oracle Advanced Compression can be applied to a complete table and all its partitions. You may have a single table or individual partitions that make up that target table. It is then possible to compress all or some of the partitions.

Advanced compression with partitioned tables

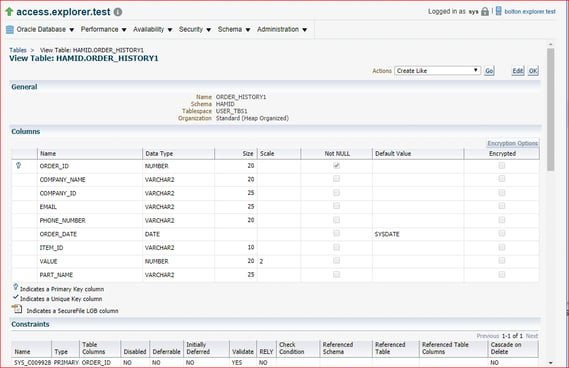

The following example discusses a practical example of a single large table with 106 million records that is first partitioned and then compressed using Oracle Advance Compression. Following the recommendations made by Oracle SQL Access Advisor, the table was partitioned using the range partition method on the column ORDER_DATE. The table resides in a tablespace containing a single data file.

SQL> desc order_history Name Null? Type ------------------------------------------------------------------------- -------- ----------- ORDER_ID NOT NULL NUMBER(20) COMPANY_NAME VARCHAR2(20) COMPANY_ID VARCHAR2(25) EMAIL VARCHAR2(25) PHONE_NUMBER VARCHAR2(20) ORDER_DATE DATE ITEM_ID VARCHAR2(10) VALUE NUMBER(20,2) PART_NAME vbarchar2(25)

After the table was partitioned, the structure was changed as follows;

The partitions were created based on the recommendation from the SQL Access Advisor. However, the partitions listed below are not compressed. The records were moved from the single large table to the newly partitioned table that was date ranged on all 12 months of the year 2019.

Select p.partition_name, p.tablespace_name, p.compression, p.compress_for, round(sum(p.num_rows / p.blocks),0) rows_per_block, sum(p.num_rows) num_rows, sum(p.blocks) blocks, round(sum(s.bytes) /(1024*1024)) MB from dba_tab_partitions p, dba_segments s where p.table_name = 'ORDER_HISTORY' and p.partition_name = s.partition_name and p.table_name = s.segment_name group by p.partition_name, p.tablespace_name, p.compression, p.compress_for order by 1; PARTITION_ TABLESPACE COMPRESS COMPRESS_F ROWS_PER_BLOCK NUM_ROWS BLOCKS MB ---------- ---------- -------- ---------- -------------- ---------- ---------- ---------- P10_2019 USER_TBS1 DISABLED 74 8028250 109037 856 P11_2019 USER_TBS1 DISABLED 74 7761989 104957 824 P12_2019 USER_TBS1 DISABLED 71 14586861 204116 1600 P1_2019 USER_TBS1 DISABLED 72 12984477 179564 1408 P2_2019 USER_TBS1 DISABLED 73 8033724 110057 864 P3_2019 USER_TBS1 DISABLED 74 8027748 109037 856 P4_2019 USER_TBS1 DISABLED 74 7764396 104957 824 P5_2019 USER_TBS1 DISABLED 74 8033735 109037 856 P6_2019 USER_TBS1 DISABLED 74 7761381 104957 824 P7_2019 USER_TBS1 DISABLED 74 7759863 104957 824 P8_2019 USER_TBS1 DISABLED 74 8027636 109037 856 P9_2019 USER_TBS1 DISABLED 74 7760389 104957 824

All the above partitions can all be compressed in one attempt or individually based on certain criteria such as a date value. The partition sizes can be seen above before the compression. We can compress the individual partitions online without any interruptions to the normal DML operations on this or other partitions. This can be a very CPU & I/O intensive operation. The typical command used can be one of the followings;

Alter table XXXXXXXXXX row store compress advanced; Online Redefinition (dbms_redefinition); Alter table XXXXXXXXXX move partition YYYY row store compress advanced; Alter table XXXXXXXXXX move partition YYYY online row store compress advanced;

Each of the above options has certain characteristics. However, the last option allows full read / write DML activity on the partition during the process and keeps all the indexes valid after the move operation is completed. These operations can be very CPU and I/O intensive depending on the size of the partition and the CPU utilisation on the server. It is best to carry out these activities at weekends or evenings in quite business periods. You may also work on one particular partition at a time.

Suitability of Data

The ratio of compression and disk storage saved very much depends on the cardinality of the data itself. The package DBMS_COMPRESSION advisor is used to estimate potential storage saving before implementing this functionality. After compressing all the partitions, we can see the results below. In this example an average ratio of 20% storage was gained after compression due to the cardinality of data. In other cases, you could see ratios of up to 90% storage gain.

Select p.partition_name, p.tablespace_name, p.compression, p.compress_for, round(sum(p.num_rows / p.blocks),0) rows_per_block, sum(p.num_rows) num_rows, sum(p.blocks) blocks, round(sum(s.bytes) /(1024*1024)) MB from dba_tab_partitions p, dba_segments s where p.table_name = 'ORDER_HISTORY' and p.partition_name = s.partition_name and p.table_name = s.segment_name group by p.partition_name, p.tablespace_name, p.compression, p.compress_for order by 1; PARTITION_ TABLESPACE COMPRESS COMPRESS_F ROWS_PER_BLOCK NUM_ROWS BLOCKS MB ---------- ---------- -------- ---------- -------------- ---------- ---------- ---------- P10_2019 USER_TBS2 ENABLED ADVANCED 86 8028250 93572 736 P11_2019 USER_TBS2 ENABLED ADVANCED 86 7761989 90440 712 P12_2019 USER_TBS2 ENABLED ADVANCED 87 14586861 168544 1317 P1_2019 USER_TBS2 ENABLED ADVANCED 87 12984477 148496 1160 P2_2019 USER_TBS2 ENABLED ADVANCED 87 8033724 92152 720 P3_2019 USER_TBS2 ENABLED ADVANCED 86 8027748 93600 731 P4_2019 USER_TBS2 ENABLED ADVANCED 86 7764396 90468 712 P5_2019 USER_TBS2 ENABLED ADVANCED 86 8033735 93648 732 P6_2019 USER_TBS2 ENABLED ADVANCED 86 7761381 90432 707 P7_2019 USER_TBS2 ENABLED ADVANCED 86 7759863 90398 712 P8_2019 USER_TBS2 ENABLED ADVANCED 86 8027636 93572 736 P9_2019 USER_TBS2 ENABLED ADVANCED 86 7760389 90396 712

It should also be noted that SQL read operations against the table partitions results in 50% less consistent gets when executed against a compressed partition compared to the same uncompressed partition.

Advanced compression for backups & standby

Oracle Advanced Compression can also be utilised with Oracle Data Pump and Oracle RMAN utilities, using the Basic, Low, Medium and High levels of compression levels. This allows for the final output to be compressed based on the options specified. Oracle Data Guard can also utilise Oracle Advanced Compression to compress the redo data before being sent to the standby database.

View what compression ratio you can achieve

The Oracle Enterprise Manager 13c Compression Summary screen below also provides a detailed breakdown of the database segments, the compression savings and the type of compression being used. Here we can see the compression in use by the target table.

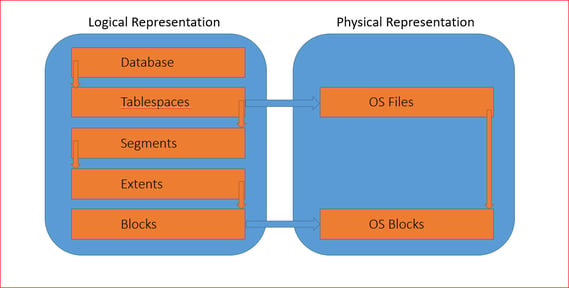

The importance of partition design

It is important to understand the logical and physical design of the partitions and the underlying file systems. The typical logical and physical of Oracle environments are shown below;

In this example, the table ORDER_HISTORY is divided into separate partitions covering different months of a year. New partitions are added for each new month. The logical partitions are still held within the single physical data file as shown below.

The above configuration can now be improved further by mapping each partition to a separate physical file, each on a separate file system, as shown below.

Save on storage costs

It is now possible to move individual data files that represent old and less frequently accessed partitions to a separate slower less expensive storage device (i.e. /u09/….). The more recent and frequently accessed partitions will remain on the existing fast and more expensive storage devices (i.e. /u03/…). The individual partitions can also be compressed with a different option while still providing full DML access without any interruption or I/O latency to the frontend applications. The older partitions can also be removed without any adverse effect on the rest of the table.

The above concept is a simple representation of a manual partitioning, compression and storage realignment. Oracle Automatic Data Optimization (ADO) functionality allows an automatic and intelligent approach to administering and maintaining compression and storage tiering. ADO uses a heat map to assess the space usage at the block and segment levels. It then uses this information to define the correct compression and storage polices.

Proof-of-Concept

The combination of Oracle Partitioning and Oracle Advanced Compression options makes for powerful database solutions when maintaining and optimising large volumes of data. As with any significant changes to a databases structure, it is imperative that this functionality is fully investigated and tested before being deployed in a production database environment.

DSP specialise in the management and optimisation of Oracle Database workloads, so if this is a focus that you would like to investigate further, please don’t hesitate to contact us, to explore this fully and perhaps discuss setting up a proof-of-concept for Oracle Advanced Compression with Partitioning.

Author: Hamid Ansari

Job Title: Technical Consultant

Bio: Hamid is a Technical consultant at DSP, he has a BA(Hons) in Data Processing / Business computing from the University of Sunderland and is a specialist in Oracle Database and associated technologies. He has over 25 years of working with Oracle RDMS starting with version 5.1. His main focus areas are Oracle GoldenGate, RAC, DataGuard, Engineered Systems and Oracle Cloud. He has worked across various companies in the Middle East, Europe, UK and US.

.png?width=250&name=stonewater-logo%20(1).png)

.webp?width=569&name=Oracles%20advanced%20compression%20with%20partitioned%20tables%20(1).webp)

.webp?width=1233&name=Importance%20of%20partition%20design%20(1).webp)

.webp?width=569&name=Importance%20of%20partition%20design%20(2).webp)