- About

Getting to know us

- Services

- Managed Services

- Application Services

- Cloud Services

- Data Science

- Consulting Services

- Technology Solutions

Managed Services

Application Services

Data Science

Consulting Services

Technology Solutions

- Industries

- Resources

Resources

- Contact

- Contact us

Exploring Autonomous Data Warehouse: Part 2

Contents

Now we have moved data into Cloud storage (see part 1) we want to move it into ADW. It sounds pretty simple (and to be fair it is) but I can guarantee that the first time you do it you may hit a few issues. So again, let’s look at a few things you need to do to enable this.

First of all you need to have a Cloud user with the AUTH token created and those details will be saved in ADW. I’m using the same user from the first blog to upload data into Cloud Storage. To upload data into Cloud storage I used API keys; for the allowing ADW to connect to Cloud Storage, you need AUTH tokens. AUTH tokens can be easily generated in the console but when you generate them it will create a specific password which will not be recorded anywhere. You need to generate and have your procedure ready to create the Cloud credential. In ADW do the following.

BEGIN DBMS_CLOUD.CREATE_CREDENTIAL( credential_name => 'OACADW_CRED', username => 'oacadwcreds', password => '<AUTHTOKENPASSWORD>'

);

END;

/

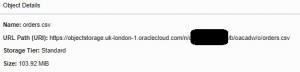

The next step is to try and upload some test data in Cloud storage; I would recommend testing this with a file with a few records in to ensure you have all the elements in place like the access and object URL. When you’re in Cloud Storage bucket you can click the three dots to find the URL of the file you want to load into ADW or query via an External Table.

You have two options with DBMS_CLOUD to start to look at data; create an External Table or use the COPY_DATA procedure to copy the data into an existing table. When you start to do this you will see errors like this:

ORA-29913: error in executing ODCIEXTTABLEFETCH callout ORA-30653: reject limit reached

- 00000 - "error in executing %s callout"

*Cause: The execution of the specified callout caused an error. *Action: Examine the error messages take appropriate action.

OR

Error report - ORA-20000: ORA-29400: data cartridge error KUP-04020: found record longer than buffer size supported, 10002432, in https://objectstorage.uk-london-1.oraclecloud.com/n/XXXXXXX/b/oacadw/o/orders.csv (offset=0) ORA-06512: at "C##CLOUD$SERVICE.DBMS_CLOUD", line 562 ORA-06512: at "C##CLOUD$SERVICE.DBMS_CLOUD", line 1008 ORA-06512: at "C##CLOUD$SERVICE.DBMS_CLOUD", line 1031 ORA-06512: at line 2

The key to using either the External Table method or COPY_DATA was the use of the formatting flags in the procedure. After some tweaking this combination seemed to work best in terms of getting data into ADW via either method; BUT you need to obviously do data validation if you’re migrating data between platforms and using Cloud Storage as a middle man.

json_object('delimiter' value ',' , 'ignoremissingcolumns' value 'true' , 'blankasnull' value 'true', 'rejectlimit' value '10')

Another thing to watch for is the data you’re loading; for example if the CSV file has a column description as the first row and you load that data into a table with any NUMBER values it will be rejected immediately. Quite rightly the rejectlimit is defaulted to 0.

order_id,user_id,eval_set,order_number,order_dow,order_hour_of_day,days_since_prior_order

2539329,1,prior,1,2,08, 2398795,1,prior,2,3,07,15.0

When using the COPY_DATA procedure it will also generate some logging tables; COPY$n_LOG and COPY$n_BAD to help you troubleshoot the issues. I think the other thing worth mentioning is that the Cloud storage doesn’t have to be publicly available to do this; so if you’re hitting access issues, don’t switch your bucket to public thinking it will fix the issue. Here is an example:

CREATE TABLE ORDERS

( order_id number, user_id number, eval_set varchar2(100), order_number number, order_dow number, order_hour_of_day number, days_since_prior_order number (30,20));

BEGIN DBMS_CLOUD.CREATE_CREDENTIAL( credential_name => 'ADWOAC', username => 'OCI-USER', password => 'AUTHTOKEN'

);

END;

/

BEGIN DBMS_CLOUD.COPY_DATA( table_name => 'ORDERS', credential_name => 'ADWOAC', file_uri_list => 'https://objectstorage.uk-london-1.oraclecloud.com/n/TENANCY/b/BUCKET/o/orders1.csv', format => json_object('delimiter' value ',' , 'ignoremissingcolumns' value 'true', 'blankasnull' value 'true', 'rejectlimit' value '1')

);

END;

/

Sample CSV

2539329,1,prior,1,2,08, 2398795,1,prior,2,3,07,15.0

Read on to Part 3: Using DataPump to move data In and Out of ADW

.png?width=250&name=stonewater-logo%20(1).png)